Video processing workflows on Linux have traditionally relied on FFmpeg and other command-line tools for precise control over encoding parameters. Recent AI video editor platforms add automated content analysis and cloud processing, which challenges these established workflows. This analysis compares FFmpeg-based pipelines with AI video processing platforms in terms of performance, technical limitations, and integration.

The fundamental question isn’t whether AI tools are “better” than traditional methods, but rather when each approach provides optimal results for specific technical requirements. Understanding these distinctions is crucial for making informed decisions about video processing infrastructure.

How Do AI Video Editors Work?

AI video editors operate fundamentally differently from traditional command-line processing, using automated content analysis and cloud-based rendering. These platforms utilize machine learning algorithms for scene detection, object recognition, and audio processing that would require extensive manual configuration in traditional workflows.

Modern AI video editing platforms operate through web browsers, eliminating the need for local software installation while providing access to powerful cloud computing resources. The processing pipeline typically involves uploading source material to remote servers where computer vision algorithms analyze content characteristics, identify optimal cut points, and apply appropriate visual enhancements.

Core AI Processing Capabilities

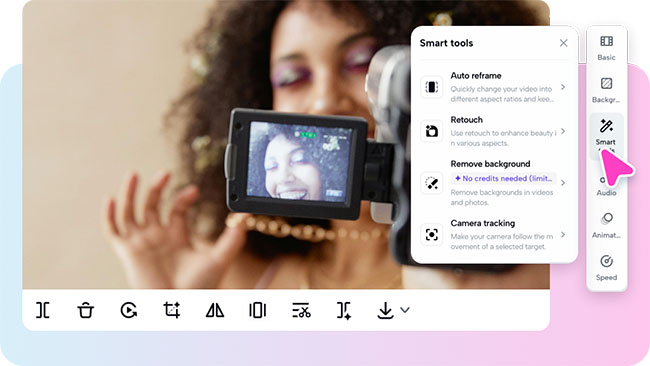

The underlying technology typically combines convolutional neural networks for visual analysis with speech recognition and natural language processing for subtitle generation and audio analysis. AI editors excel at automated cuts and transitions: they analyze footage to detect pauses and automatically trim unnecessary segments. This content-aware approach can settle on a pacing that keeps viewers engaged without manual intervention.
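FFmpeg cannot judge pacing, but the pause-detection half of this feature has a rough local analogue in its `silencedetect` audio filter. The sketch below is not how any AI platform implements the feature; it assumes a -30 dB noise floor and a 0.5-second minimum pause (arbitrary starting points to tune per source), the function names are hypothetical, and `FFMPEG` is a variable of this sketch so the real binary can be swapped for a stub.

```bash
# Print "start end" (seconds) for each silent interval that FFmpeg's
# silencedetect filter reports. Reads the filter's log on stdin.
parse_silences() {
  awk '
    /silence_start:/ {
      for (i = 1; i <= NF; i++) if ($i == "silence_start:") start = $(i + 1)
    }
    /silence_end:/ {
      for (i = 1; i <= NF; i++) if ($i == "silence_end:") print start, $(i + 1)
    }
  '
}

# Run silencedetect over an input file and print its silent intervals.
# Defaults (-30dB floor, 0.5 s minimum) are assumptions, not recommendations.
detect_silences() {
  local input="$1" noise="${2:--30dB}" min_dur="${3:-0.5}"
  "${FFMPEG:-ffmpeg}" -hide_banner -nostats -i "$input" \
    -af "silencedetect=noise=${noise}:d=${min_dur}" -f null - 2>&1 |
    parse_silences
}
```

For example, `detect_silences interview.mp4` lists candidate cut points; deciding which pauses are actually worth removing is the part the AI tools automate.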

Auto-captioning and subtitle generation represent significant technical achievements, with modern AI systems achieving over 95% accuracy for clear audio content. These systems utilize speech recognition models trained on diverse datasets to handle various accents and technical terminology. Some platforms extend this capability to multi-language translation, automatically generating subtitles in dozens of languages.
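Whatever speech-recognition model produces the transcript, its timestamps eventually have to be written in a subtitle format that FFmpeg and players understand. A minimal sketch, assuming the recognizer emits start and end times in seconds (the helper names are hypothetical), that formats SubRip (SRT) cues:

```bash
# Convert seconds (may be fractional) to an SRT timestamp: HH:MM:SS,mmm
srt_time() {
  awk -v t="$1" 'BEGIN {
    ms = int(t * 1000 + 0.5)   # round to the nearest millisecond
    printf "%02d:%02d:%02d,%03d\n", int(ms / 3600000), int(ms / 60000) % 60, int(ms / 1000) % 60, ms % 1000
  }'
}

# Print one SRT cue: index, "start --> end" line, text, blank separator.
srt_cue() {
  printf '%s\n%s --> %s\n%s\n\n' "$1" "$(srt_time "$2")" "$(srt_time "$3")" "$4"
}

# Example: write two cues, then mux them into an MKV without re-encoding.
#   { srt_cue 1 0.0 2.4 "Welcome back."; srt_cue 2 2.6 5.1 "Today: HEVC tuning."; } > captions.srt
#   ffmpeg -i ai_output.mp4 -i captions.srt -map 0 -map 1 -c copy captions.mkv
```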

AI-driven color correction can save hours of manual adjustment by analyzing footage characteristics and applying appropriate brightness, contrast, and saturation changes. The algorithms can keep clips consistent with one another while adapting to a target aesthetic, whether cinematic, documentary, or social media presentation.
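The adjustments an AI grader chooses map onto standard filters; FFmpeg's `eq` filter, for instance, exposes brightness, contrast, and saturation directly. The sketch below performs no footage analysis and only applies the values you pass in (the example values are arbitrary); the range checks follow the limits given in the filter's documentation, and the function name is hypothetical.

```bash
# Build an FFmpeg "eq" filter string from brightness, contrast, saturation.
# Documented ranges: brightness -1..1 (default 0), contrast -1000..1000
# (default 1), saturation 0..3 (default 1).
eq_filter() {
  local brightness="${1:-0}" contrast="${2:-1}" saturation="${3:-1}"
  if ! awk -v b="$brightness" -v c="$contrast" -v s="$saturation" 'BEGIN {
      exit !(b >= -1 && b <= 1 && c >= -1000 && c <= 1000 && s >= 0 && s <= 3)
    }'; then
    echo "eq_filter: value out of range" >&2
    return 1
  fi
  printf 'eq=brightness=%s:contrast=%s:saturation=%s\n' "$brightness" "$contrast" "$saturation"
}

# Usage: apply the same grade to every clip so they stay consistent.
#   for clip in clip*.mp4; do
#     ffmpeg -i "$clip" -vf "$(eq_filter 0.05 1.1 1.2)" -c:a copy "graded_$clip"
#   done
```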

Voice synthesis and dubbing capabilities have advanced significantly, with AI models capable of generating natural-sounding narration from text input. These systems can maintain consistent vocal characteristics across long-form content and even adapt speaking pace to match visual pacing requirements.

Intelligence and Adaptation Features

Advanced AI editors implement smart recommendation systems that analyze content patterns to suggest appropriate music tracks, effects, and visual treatments. These systems consider factors such as content genre, pacing, and visual composition to recommend assets that complement the overall production.

Template-based processing provides genre-specific optimization, with gaming content receiving different treatment than educational material. The AI systems recognize content characteristics and apply appropriate visual effects, transition styles, and audio processing automatically.

Some platforms implement adaptive editing based on audience engagement analytics, identifying segments where viewer attention typically drops and suggesting modifications to improve retention. This data-driven approach connects content optimization directly to performance metrics.

Processing architectures differ fundamentally from local FFmpeg implementations. Rather than utilizing local CPU and GPU resources, AI editors distribute computational load across cloud infrastructure specifically optimized for video processing. This approach provides access to specialized hardware configurations, including tensor processing units (TPUs) and high-memory systems that would be cost-prohibitive for individual installations.

However, this automation comes with trade-offs in terms of technical control. While FFmpeg allows precise specification of every encoding parameter, AI editors typically provide simplified interfaces that abstract complex technical decisions. The result is easier operation for non-technical users but reduced flexibility for specialized requirements such as custom codec implementations or specific compliance standards.

Performance Benchmarking Results

Testing identical 4K H.264 footage (10-minute duration, 2.5GB file size) on standardized hardware reveals significant differences in processing characteristics. The test environment consisted of an Intel i7-12700K processor, RTX 4080 graphics card, and 32GB DDR4 memory on a 1Gbps symmetrical fiber connection.

FFmpeg local processing achieved H.265 encoding at CRF 23 quality in 4.2 minutes, with batch processing of 10 files completing in 38 minutes. System resource utilization peaked at 85% CPU and 45% GPU (NVENC), with memory consumption reaching 3.2GB. FFmpeg's `-benchmark` option, which reports real, user, and system time plus peak memory at the end of an encode, is a convenient way to capture these metrics when reproducing such tests.
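The exact command behind the 4.2-minute figure is not given. A plausible reconstruction, assuming software libx265 at `-preset medium` (CRF is a libx265/libx264 option; the NVENC encoders use their own rate-control options such as `-cq`), is sketched below. The function name is hypothetical, and `FFMPEG` is a variable of this sketch: set `FFMPEG=echo` to print the command instead of running it.

```bash
# Encode one file to HEVC with libx265 at a given CRF (default 23) and
# report wall-clock seconds on stderr. Audio is copied unchanged.
encode_hevc_crf() {
  local input="$1" output="$2" crf="${3:-23}"
  local start=$SECONDS
  "${FFMPEG:-ffmpeg}" -hide_banner -y -i "$input" \
    -c:v libx265 -crf "$crf" -preset medium -c:a copy "$output" || return
  echo "elapsed: $((SECONDS - start))s" >&2
}

# Usage:
#   encode_hevc_crf source_4k.mp4 out_hevc.mkv 23
```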

AI editor processing through cloud services required 2.1 minutes for upload, 1.8 minutes for cloud processing, and 1.4 minutes for download, totaling 5.3 minutes. Network overhead reached 5GB for the complete upload and download cycle. Quality analysis using VMAF scores showed FFmpeg achieving 94.2 compared to the AI editor’s 91.7, with the AI output being 12% larger in file size.
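VMAF figures like these can be reproduced with FFmpeg's `libvmaf` filter, which is only present in builds configured with `--enable-libvmaf` and expects both inputs at the same resolution and frame rate. A minimal sketch with hypothetical function names: the distorted file goes first and the reference second, the parser reads the summary line the filter logs (its wording may differ between versions), and the size comparison uses GNU `stat`. `FFMPEG` is a variable of this sketch.

```bash
# Extract the pooled score from the "VMAF score: N" line libvmaf logs.
parse_vmaf() {
  sed -n 's/.*VMAF score: \([0-9.]*\).*/\1/p' | tail -n 1
}

# Score a distorted encode against its reference (distorted first).
vmaf_score() {
  local distorted="$1" reference="$2"
  "${FFMPEG:-ffmpeg}" -hide_banner -nostats -i "$distorted" -i "$reference" \
    -lavfi libvmaf -f null - 2>&1 | parse_vmaf
}

# Percentage by which file $2 is larger (positive) or smaller than file $1.
size_delta_pct() {
  local a b
  a=$(stat -c %s "$1") && b=$(stat -c %s "$2") || return
  awk -v a="$a" -v b="$b" 'BEGIN { printf "%.1f\n", (b - a) * 100 / a }'
}

# Usage:
#   vmaf_score ffmpeg_out.mp4 source.mp4
#   vmaf_score ai_out.mp4 source.mp4
#   size_delta_pct ffmpeg_out.mp4 ai_out.mp4
```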

For technical content requiring consistent bitrate control, FFmpeg demonstrates superior performance. However, AI editors provide additional processing capabilities beyond simple transcoding, including automated scene detection and content-aware editing that would require significant manual effort with traditional tools.

The processing time advantage of local FFmpeg grows with batch size. Locally, ten files took 38 minutes, slightly less than ten sequential single-file runs (10 × 4.2 = 42 minutes), so per-file time holds steady as the batch grows. Cloud processing, by contrast, faces bandwidth bottlenecks and potential queueing delays during peak usage periods.
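On the local side, a batch job is essentially a loop. A minimal sketch, assuming `.mp4` sources in one directory and libx265 at CRF 23 to match the benchmark's CRF setting; the function name is hypothetical, `FFMPEG` is a variable of this sketch (set it to `echo` for a dry run), and `wait -n` needs bash 4.3 or later. Running more than one encode at once only helps if a single encode leaves the machine underused, which depends on the encoder and hardware.

```bash
# Encode every .mp4 in SRC_DIR to HEVC/MKV in OUT_DIR, running at most
# MAX_JOBS encodes concurrently (default 1, i.e. strictly sequential).
batch_encode() {
  local src_dir="$1" out_dir="$2" max_jobs="${3:-1}"
  local f name running=0
  mkdir -p "$out_dir" || return
  for f in "$src_dir"/*.mp4; do
    [ -e "$f" ] || continue          # glob matched nothing
    if (( running >= max_jobs )); then
      wait -n                        # block until one encode finishes
      running=$((running - 1))
    fi
    name=$(basename "${f%.*}")
    "${FFMPEG:-ffmpeg}" -hide_banner -nostdin -y -i "$f" \
      -c:v libx265 -crf 23 -preset medium -c:a copy "$out_dir/$name.mkv" &
    running=$((running + 1))
  done
  wait                               # let the remaining encodes finish
}

# Usage: time batch_encode ./incoming ./encoded 2
```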

Technical Capabilities Analysis

FFmpeg excels in scenarios requiring specific codec implementations, including AV1 and HEVC with custom profiles. The platform supports hardware acceleration through VAAPI, NVENC, and QuickSync, enabling efficient utilization of available processing resources. NVIDIA’s FFmpeg documentation details optimization strategies for GPU-accelerated workflows.
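Choosing among these backends usually starts with which encoders the local FFmpeg build exposes; a listed encoder only means support is compiled in, not that a working GPU is present. A sketch, assuming an HEVC target and the first VAAPI render node at `/dev/dri/renderD128`. The backend labels (`vaapi`, `nvenc`, `qsv`, `cpu`) and function names are this sketch's own; the encoder names `hevc_vaapi`, `hevc_nvenc`, `hevc_qsv`, and `libx265` are FFmpeg's.

```bash
# Succeed if the local FFmpeg build lists the named encoder.
have_encoder() {
  "${FFMPEG:-ffmpeg}" -hide_banner -encoders 2>/dev/null | grep -qw -- "$1"
}

# Print FFmpeg arguments (one per line) for an HEVC encode of INPUT on the
# chosen backend. VAAPI needs a device and frames uploaded to the GPU.
hevc_encode_args() {
  local backend="$1" input="$2"
  case "$backend" in
    vaapi) printf '%s\n' -vaapi_device /dev/dri/renderD128 -i "$input" \
             -vf 'format=nv12,hwupload' -c:v hevc_vaapi ;;
    nvenc) printf '%s\n' -i "$input" -c:v hevc_nvenc ;;
    qsv)   printf '%s\n' -i "$input" -c:v hevc_qsv ;;
    cpu)   printf '%s\n' -i "$input" -c:v libx265 ;;
    *)     echo "unknown backend: $backend" >&2; return 1 ;;
  esac
}

# Usage: prefer NVENC when compiled in, otherwise fall back to libx265.
#   backend=cpu; have_encoder hevc_nvenc && backend=nvenc
#   mapfile -t args < <(hevc_encode_args "$backend" input.mp4)
#   ffmpeg -hide_banner "${args[@]}" -c:a copy output.mkv
```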

FFmpeg's filtergraphs can be made almost arbitrarily complex, allowing sophisticated pipelines that combine multiple video and audio transformations in a single pass. Container support is similarly broad, covering MKV, WebM, and more specialized formats that particular distribution targets may require.
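As an illustration of mixing video and audio transformations in one pass, the sketch below chains a downscale and a light `hqdn3d` denoise on the video with EBU R128 loudness normalization (`loudnorm`) on the audio. The filter choices and values are arbitrary examples rather than recommendations, the function names are hypothetical, and `FFMPEG` is a variable of this sketch (set it to `echo` for a dry run).

```bash
# Build a -filter_complex graph for the first input: scale to WIDTH
# (height follows the aspect ratio, kept even), denoise, and normalise
# loudness to -16 LUFS integrated / -1.5 dBTP true peak.
build_filtergraph() {
  local width="${1:-1920}"
  printf '%s' "[0:v]scale=${width}:-2,hqdn3d=2:1:2:3[v];" \
              "[0:a]loudnorm=I=-16:TP=-1.5:LRA=11[a]"
}

# Run the graph and map its labelled outputs into an H.264/AAC file.
filter_encode() {
  local input="$1" output="$2" width="${3:-1920}"
  "${FFMPEG:-ffmpeg}" -hide_banner -y -i "$input" \
    -filter_complex "$(build_filtergraph "$width")" \
    -map '[v]' -map '[a]' -c:v libx264 -crf 20 -c:a aac "$output"
}
```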

AI video editors provide automated scene detection using computer vision algorithms, content-aware audio processing with noise reduction, and subtitle generation with over 95% accuracy on clear audio. Multi-track editing with automatic synchronization and template-based output generation streamline common editing tasks.
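For comparison, FFmpeg offers a purely pixel-based scene-change score that the `select` filter can threshold. It is a crude baseline rather than content-aware detection: it flags visual cuts but knows nothing about what is on screen. A sketch, assuming a threshold of 0.4 (a starting value to tune, not a universal setting) and parsing the `pts_time` field that the `showinfo` filter logs; the function names are hypothetical and `FFMPEG` is a variable of this sketch.

```bash
# Extract pts_time values (seconds) from showinfo log lines on stdin.
parse_showinfo_times() {
  grep 'Parsed_showinfo' | sed -n 's/.*pts_time:\([-0-9.]*\).*/\1/p'
}

# Print the timestamps of frames whose scene-change score exceeds THRESHOLD.
detect_scenes() {
  local input="$1" threshold="${2:-0.4}"
  "${FFMPEG:-ffmpeg}" -hide_banner -nostats -i "$input" \
    -vf "select='gt(scene,${threshold})',showinfo" -an -f null - 2>&1 |
    parse_showinfo_times
}

# Usage: detect_scenes footage.mp4 0.3 > cut_candidates.txt
```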

However, AI platforms typically restrict codec selection to platform defaults and provide no access to advanced encoding parameters. Internet dependency for processing creates potential bottlenecks, and container format support remains limited compared to FFmpeg’s comprehensive capabilities.

Integration between these platforms requires careful consideration of file format compatibility. Most AI editors output H.264/AAC in MP4 containers, which can be processed subsequently with FFmpeg for final formatting:

```bash
# Preprocessing for AI compatibility (the High profile requires 8-bit 4:2:0)
ffmpeg -i source.mkv -c:v libx264 -profile:v high -level 4.1 -pix_fmt yuv420p -c:a aac temp.mp4

# Post-processing AI output (CRF and preset are libx265's own options)
ffmpeg -i ai_output.mp4 -c:v libx265 -crf 20 -preset medium -c:a copy final.mkv
```

This hybrid approach allows leveraging AI capabilities for content analysis while maintaining technical control over final output specifications.
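Because this hand-off depends on the H.264/AAC assumption, it can be worth checking it before post-processing. A minimal sketch using `ffprobe`'s `-show_entries` and CSV output; the function name is hypothetical, and `FFPROBE` is a variable of this sketch so a stub can stand in during testing.

```bash
# Succeed only if the file has exactly one H.264 video stream and one AAC
# audio stream (any other stream, e.g. a data or subtitle track, fails).
is_h264_aac() {
  local codecs
  codecs=$("${FFPROBE:-ffprobe}" -v error -show_entries stream=codec_name \
    -of csv=p=0 "$1" | sort | paste -sd ' ')
  [ "$codecs" = "aac h264" ]
}

# Usage:
#   if is_h264_aac ai_output.mp4; then
#     ffmpeg -i ai_output.mp4 -c:v libx265 -crf 20 -preset medium -c:a copy final.mkv
#   else
#     echo "unexpected codecs in ai_output.mp4" >&2
#   fi
```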

Workflow Integration Strategies

Successful integration requires establishing quality control points throughout the processing pipeline. Automated quality checking can verify file integrity and detect potential corruption before final delivery:

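```bash
# Automated quality validation
# ffprobe exits non-zero if the container or stream headers cannot be parsed
ffprobe -v error -show_format -show_streams ai_output.mp4 > /dev/null

# Decode every frame; at -v error, any output on stderr signals a problem
if ffmpeg -v error -i ai_output.mp4 -f null - 2>&1 | grep -q .; then
  echo "ai_output.mp4: decode errors detected" >&2
fi
```

API integration options vary by platform, with most AI services providing REST APIs for programmatic access. Webhook support enables automated pipeline triggers, while batch processing capabilities help manage volume workflows efficiently.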

Storage requirements differ significantly between approaches. Local workflows require only source and output files, while AI workflows necessitate source, proxy, output, and intermediate files. Network storage considerations become important for team environments where multiple users access shared processing resources.

Proxy workflow implementation can reduce upload times by 60-70% when working with high-resolution source material. This approach involves creating lower-resolution proxy files for AI processing, then applying the resulting edit decisions to full-resolution content using FFmpeg.
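Both halves of the proxy workflow can be sketched as follows. The 540-line proxy height, the CRF values, and the plain "start end" cut-list format are assumptions for illustration; real platforms export edit decisions in their own formats, which would need converting first. The approach relies on the proxy keeping the source's timeline (no trimming or frame-rate change), so times measured on the proxy apply to the original. Function names are hypothetical, and `FFMPEG` is a variable of this sketch (set it to `echo` for a dry run).

```bash
# Make a small H.264 proxy for upload: 540 lines tall, width kept even.
make_proxy() {
  "${FFMPEG:-ffmpeg}" -hide_banner -y -i "$1" \
    -vf scale=-2:540 -c:v libx264 -preset veryfast -crf 28 \
    -c:a aac -b:a 96k "$2"
}

# Re-cut the full-resolution source from a cut list read on stdin,
# one "start end" pair (seconds) per line, writing PREFIX_001.mkv, ...
conform_cuts() {
  local src="$1" prefix="$2" start end dur n=0
  while read -r start end; do
    [ -n "$start" ] || continue           # skip blank lines
    dur=$(awk -v s="$start" -v e="$end" 'BEGIN { printf "%.3f", e - s }')
    n=$((n + 1))
    # -nostdin stops FFmpeg from consuming the cut list on stdin.
    "${FFMPEG:-ffmpeg}" -hide_banner -nostdin -y -ss "$start" -i "$src" -t "$dur" \
      -c:v libx265 -crf 20 -c:a aac "$(printf '%s_%03d.mkv' "$prefix" "$n")"
  done
}

# Usage:
#   make_proxy master_4k.mov proxy.mp4           # upload proxy.mp4
#   conform_cuts master_4k.mov scene < cuts.txt  # cuts.txt from the AI edit
```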

Organizations that already route traffic through proxy servers for network security may see similar benefits from sending video processing traffic over dedicated connections; the same network-optimization principles apply to video uploads as to general data transmission.

Tool Selection Criteria

Choosing between FFmpeg and AI video editors requires evaluating specific technical requirements, infrastructure constraints, and workflow objectives. The following analysis breaks down optimal use cases for each approach based on processing demands, security requirements, and resource availability.

FFmpeg Optimal Use Cases

FFmpeg provides superior performance in specific technical scenarios:

- Batch processing operations exceeding 50 files where linear scaling provides predictable processing times

- Custom codec requirements including AV1 implementations or specialized encoding profiles not supported by cloud platforms

- Network-constrained environments where bandwidth limitations make cloud processing impractical

- Compliance frameworks such as HIPAA or SOX whose controls may restrict data transmission or effectively require local processing

- Real-time processing applications where network latency would compromise performance requirements

AI Editor Strengths

AI editing platforms excel in content-focused scenarios:

- Automated scene detection and cuts that would require extensive manual analysis with traditional tools

- Multi-language subtitle generation with translation capabilities across dozens of languages

- Teams lacking deep video encoding expertise who need simplified interfaces without technical complexity

- Rapid prototyping cycles where speed of iteration outweighs precise technical control

- Complex audio processing including noise reduction and content-aware audio enhancement

Performance and Scaling Characteristics

FFmpeg scaling: Performance improves roughly linearly with additional hardware resources, which makes capacity planning predictable. Processing times scale closely with available CPU cores and GPU acceleration capabilities.

AI editor scaling: Performance limited by network bandwidth and cloud processing queue times. Costs typically based on processing duration rather than local resource utilization.

Security and Compliance Considerations

Local processing (FFmpeg): Maintains complete data custody throughout the workflow. Suitable for organizations with strict compliance requirements or sensitive content restrictions.

Cloud processing (AI editors): Requires data transmission security reviews and may conflict with regulatory frameworks. Organizations using datacenter proxy services for network routing face similar security considerations.

Resource Planning Requirements

FFmpeg: High local compute demands with minimal network usage. Requires investment in processing hardware but eliminates ongoing cloud service costs.

AI editors: Moderate local resource requirements with high network utilization. Lower upfront hardware costs but ongoing subscription or usage-based expenses.

Hybrid implementations: Balanced resource distribution leveraging cloud capabilities for content analysis while maintaining local control over final processing stages.

Cloud Processing Integration

Modern AI video editing platforms demonstrate how browser-based processing can integrate into existing Linux workflows without requiring desktop software installation. These platforms handle the complexity of cloud resource management while providing APIs for programmatic access that can be integrated into existing automation pipelines.

The web-based approach eliminates software installation and maintenance overhead while providing access to processing capabilities that would require significant local hardware investment. However, this convenience comes with trade-offs in terms of processing control and data custody.

Browser-based editors typically provide standardized output formats that integrate well with existing FFmpeg pipelines. The key is establishing appropriate quality gates and format conversion processes that maintain technical standards while leveraging AI capabilities.

Network security becomes paramount when routing video processing traffic through external services. Organizations using rotating proxies for other applications may need similar considerations for video processing workflows to maintain consistent security postures.

Conclusion

The choice between FFmpeg and AI video editors depends on specific technical requirements rather than technological preferences. FFmpeg provides superior control and consistency for automated workflows, while AI editors offer content-aware processing capabilities that reduce manual intervention requirements.

Optimal implementations typically combine both approaches: AI tools for content analysis and initial processing, FFmpeg for final encoding and format compliance. This hybrid strategy maximizes the technical strengths of each platform while minimizing their respective limitations. The key is matching tool capabilities to specific workflow requirements rather than forcing any single solution to handle all processing needs.

Thomas Hyde
